Voilà! I know, I know—you were expecting this article last Saturday. But here we are now. What happened? Well, it turns out life had a little detour planned for me. It wasn’t just about slipping back into normal routines; oh no, procrastination played its part. And if you’re curious, a particularly bold mosquito decided to sacrifice itself by injecting me with a lovely little Plasmodium surprise. Yep, malaria. Goodbye December plans, goodbye learning momentum, and hello unexpected bedrest.

But don’t worry—after plenty of rest and recovery, I’m back to being fully fit and ready to roll. So, let’s leave the past in the past and dive into all the good stuff, shall we?

The tales of network layer

After shaking off the malaria blues, I found myself diving headfirst into the fascinating world of the network layer. You know, that magical layer in the OSI model that’s the unsung hero of internet communication. For those who might need a quick OSI refresher: the network layer sits at the 3rd spot from the bottom (or the 4th from the top, depending on how you count). Its job? As its name not-so-subtly hints, it’s all about getting data packets from one host to another in the network.

Think of it as the ultimate travel agent, planning the perfect route for your precious data.

The network layer doesn’t just play messenger; it’s also a bit of a genius itself. To make sure your data packet gets to its destination as quickly as possible, this layer works hard to find the shortest route. And to avoid the classic “Wait, who’s this for?” scenario, it uses something called the Internet Protocol (IP). This nifty system assigns a unique IP address to every host, ensuring your data knows exactly where to go—kind of like giving every house in a city its own address. Efficient and organized, just the way we like it.

The world currently runs on IPv6—v6 stands for version 6—which uses a 128-bit addressing scheme. But before we jump headfirst into the shiny new IPv6, let’s rewind a bit and talk about its predecessor: IPv4. Why?(Because understanding the simpler, older sibling helps us appreciate why we needed an upgrade in the first place). IPv4 uses a 32-bit addressing scheme, which worked fine back in the day when the internet was just a cosy little network. But with the explosion of devices, from smartphones to smart toasters, we quickly ran out of addresses. Enter IPv6, our internet’s shiny new saviour.

IPv4 was all about organizing addresses into five subclasses: Class A, B, C, D, and E. For now, let’s focus on Class A, the VIP of the bunch.

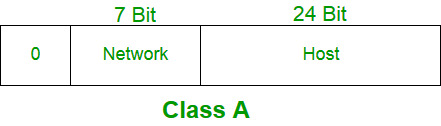

Class A

In Class A, the network ID takes up 8 bits, while the host ID gets the remaining 24 bits. But there’s a catch—the first bit of the network ID must always be 0. Because of this, we can have a maximum of 27−22^7 - 227−2 networks (two addresses are reserved: one to identify the network itself and one for broadcasting). For hosts, Class A supports up to 224−22^{24} - 2224−2 addresses per network.

The subnet mask for Class A looks like this: 255.x.x.x. Fun fact? Class A contains nearly half of all possible IPv4 addresses in the entire classful scheme. It was designed for massive networks—think governments and major corporations—but for the rest of us, it’s kind of overkill.

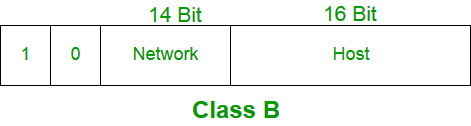

Class B

Next up, we have Class B, which strikes a balance between the extremes of Class A and the smaller subclasses. In Class B, both the network ID and the host ID are split right down the middle, with 16 bits each.

Because the network ID is bigger than in Class A, Class B can accommodate more networks but fewer hosts per network—roughly half as many hosts as Class A, to be exact. To keep things organized, the first two bits of the network ID are always fixed as 10.

The subnet mask for Class B? 255.255.x.x. It’s perfect for medium-sized networks—think universities or mid-sized companies. Not too big, not too small, just right!

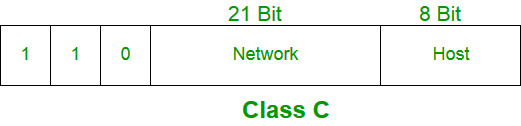

Class C

Moving along, we have Class C, where the trend continues: more bits for the network ID, fewer bits for the host ID. In this setup, the network ID takes up a whopping 24 bits, while the host ID gets only 8 bits.

This means Class C can support a huge number of networks but accommodates far fewer hosts per network compared to Classes A and B. And just like its predecessors, Class C has a signature feature—the first three bits of the network ID are always set to 110.

The subnet mask for Class C? 255.255.255.x. This makes it the go-to class for smaller networks, like those used by small businesses or local organizations.Think of it like the 1BHK apartments in Mumbai—compact, efficient, and perfect for limited space, especially if you’re a bachelor with just a few devices to connect.

Class D and Class E

Class D is reserved for multicast purposes, which means it’s used to send data to multiple recipients at once (think of it as shouting to a group of people instead of just one). The first four bits of the network ID are always 1110, and the rest are used for the host ID.

On the other hand, Class E is fully reserved for experimental or future use. The first four bits are set to 1111, with the rest allocated to the host ID.

Summary of classful addressing

So, why did we need classless addressing or CIDR (Classless Inter-Domain Routing)? The reason boils down to inefficiency. While Class A and Class B addresses were generously large, millions of those addresses ended up going to waste. Class A, with its vast number of hosts, was great for massive networks, but way overkill for smaller ones. Class B wasn’t much better, and Class C, well, it was just too small to handle growing demands.

In short, Class C was too cramped, and the others were too spacious, creating a ton of wasted IP address space.

Subnetting and Classless addressing

In the previous text, I mentioned something called the subnet mask. But wait—what exactly is a subnet? Good question! A subnet is simply a smaller, more manageable chunk of a larger block of IP addresses. When you divide a big block of addresses into smaller, contiguous networks that can be assigned to individual organizations (or even departments within a company), that’s called subnetting.

Think of it like taking a large pizza (the big block of addresses) and cutting it into smaller slices (subnets) so everyone gets a piece that fits their needs. Subnetting helps avoid wasting IP addresses and allows more efficient use of network resources.

Let’s dig into classless addressing and see how it works its magic. Unlike the rigid system of classful addressing, where the number of network and host IDs was fixed, classless addressing gives us flexibility. Here, we can convert host IDs into network IDs based on our needs. It’s like rearranging your closet to prioritize the essentials—it’s all about efficiency!

To make this work, we define something called a subnet mask. But in classless addressing, the subnet mask isn’t tied to a specific class like in Class A, B, or C. Instead, it can be customized to suit the exact size of the network. This approach helps allocate IP addresses more effectively, reducing waste and giving networks exactly what they need—no more, no less.

Network Address Translation

Now, let’s talk about Network Address Translation—or NAT, as the cool kids call it. At its core, NAT is like the receptionist at a busy office. Imagine you have a bunch of private IP addresses (devices) inside your network, all wanting to connect to the internet. But instead of each device having its own public IP address (which would be impractical and wasteful), NAT steps in to help.

With NAT, all those private IP addresses can share a single public IP address to access the internet. It’s like a whole group of people sharing one outgoing phone line. From the outside, it looks like one entity (the public IP), but inside, everyone’s happily communicating through their private IPs.

This not only saves precious IP addresses but also adds a layer of security by keeping your internal network hidden from prying eyes on the internet.

Different Routing Protocol

Distance Vector Routing Protocol

Let’s talk about the Distance Vector Routing Protocol. It’s based on the basics of the Bellman-Ford algorithm, and the idea behind it is refreshingly simple. Each router knows the distance to its neighbors, and it uses that info to calculate the shortest path to all the routers in the network. Packets then travel along these shortest paths. Easy-peasy, right?

But hold up—there’s a catch. And it’s a big one. Here’s the problem: good news travels fast, bad news crawls like a snail. (Pretty much the opposite of how things go in my life!)

What does this mean? Adding a new router to the network is smooth and happens quickly. But when a router breaks or goes offline? Yikes. This "bad news" takes its sweet time to propagate to the other routers. Why? Because the protocol keeps updating distances in small steps, leading to a dreaded problem called count to infinity. In simpler terms, routers keep guessing that a broken route might still exist until they eventually realize it’s gone for good. Spoiler alert: this is neither efficient nor ideal.

Link State Routing Protocol

Now, let’s switch gears to the second routing technique: the Link State Routing Protocol. This one is a bit fancier and uses Dijkstra’s algorithm to create the routing table (yes, the one that finds the shortest path—thank you, computer science legend).

Here’s how it works: every router gathers full knowledge of the network by sharing information with all the other routers. How? Through a technique called flooding. Essentially, each router tells its neighbors about the network state, and that information spreads across the network like wildfire—kind of like how people share rumors about me throughout the entire circle. 🤦♂️. This way, every router knows the entire map of the network and can independently compute the best routes.

The result? Faster convergence! (Translation: routers quickly agree on the network's state and adapt to changes.) But, of course, there’s a trade-off—this technique requires more bandwidth because of all the data being shared.

One of the best-known implementations of this protocol is OSPF (Open Shortest Path First). It’s widely used in larger, more complex networks where speed and accuracy are critical.

Conclusion

So, thank you for taking the time to read this article! I genuinely hope you found it informative, and that the little humorous touches helped keep you engaged along the way. This article took a bit of time and effort because I wanted to create something that stood out in a world where AI-generated content is everywhere. I really aimed for it to feel more personal, thoughtful, and authentic. If you have any feedback or suggestions for improvement, I'd love to hear them—it's all about growing and learning together.

Wishing you an amazing week, month, and year ahead! May your networks be fast, your packets never get lost, and your routers stay online. And before I forget—Good morning! And in case I don’t see ya, good afternoon, good evening, and good night! 🎥🌟 (A little nod to The Truman Show—hope you caught the reference!)

Keep learning, keep exploring, and I’ll see you in the next one!